We have an exciting new paper, now published in the Proceedings of the National Academy of Sciences!

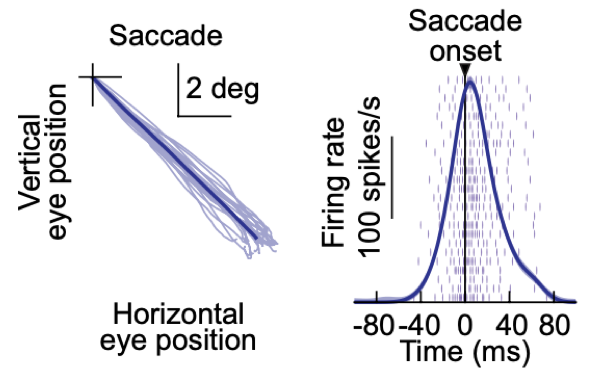

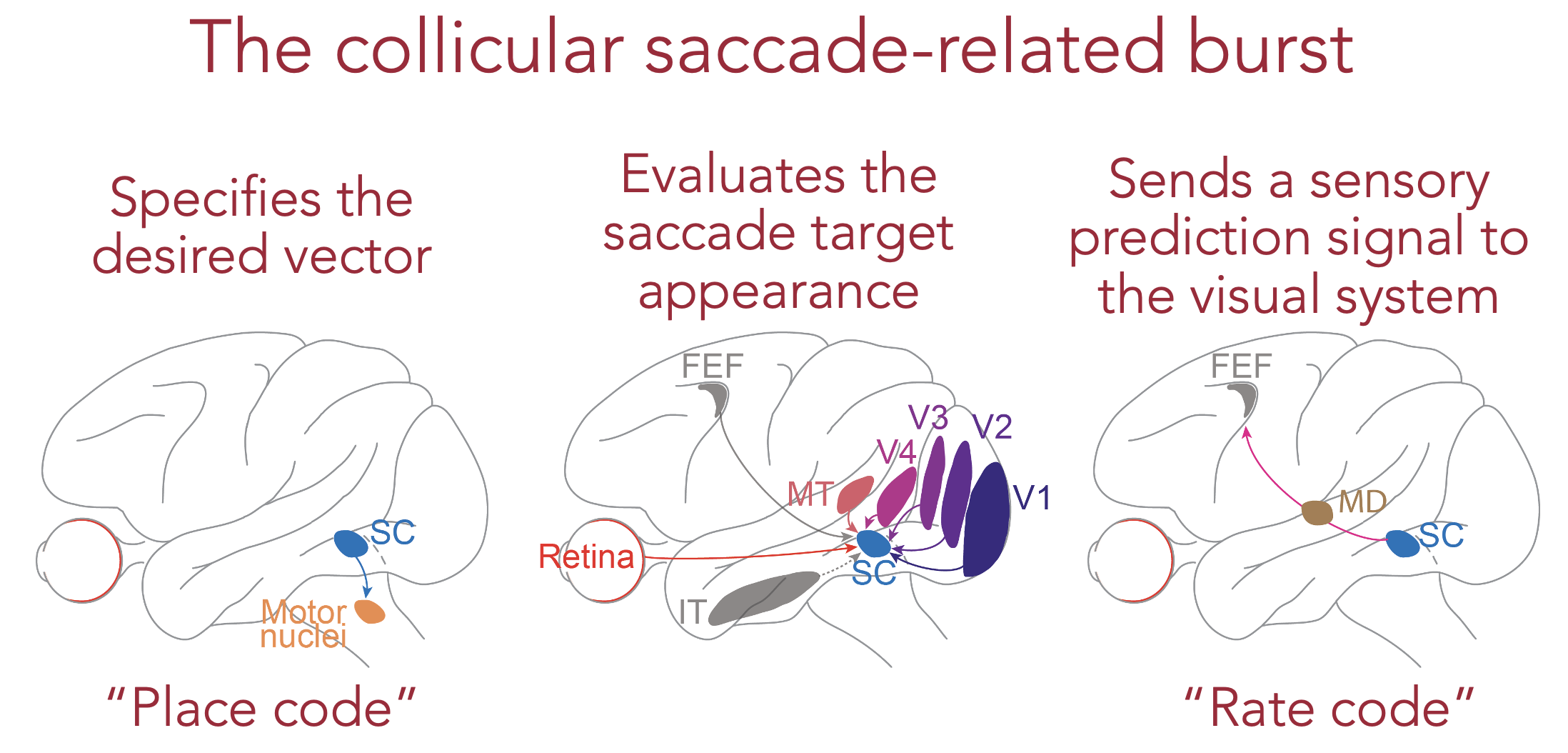

In this paper, we tackled one of the most classic notions in systems neuroscience: that superior colliculus (SC) neurons in the midbrain issue a motor command that triggers and controls the trajectories of rapid eye movements (called saccades). At the time of rapid eye movements, these SC neurons emit a strong burst of action potentials, the properties of which are classically thought to control movement dynamics and kinematics.

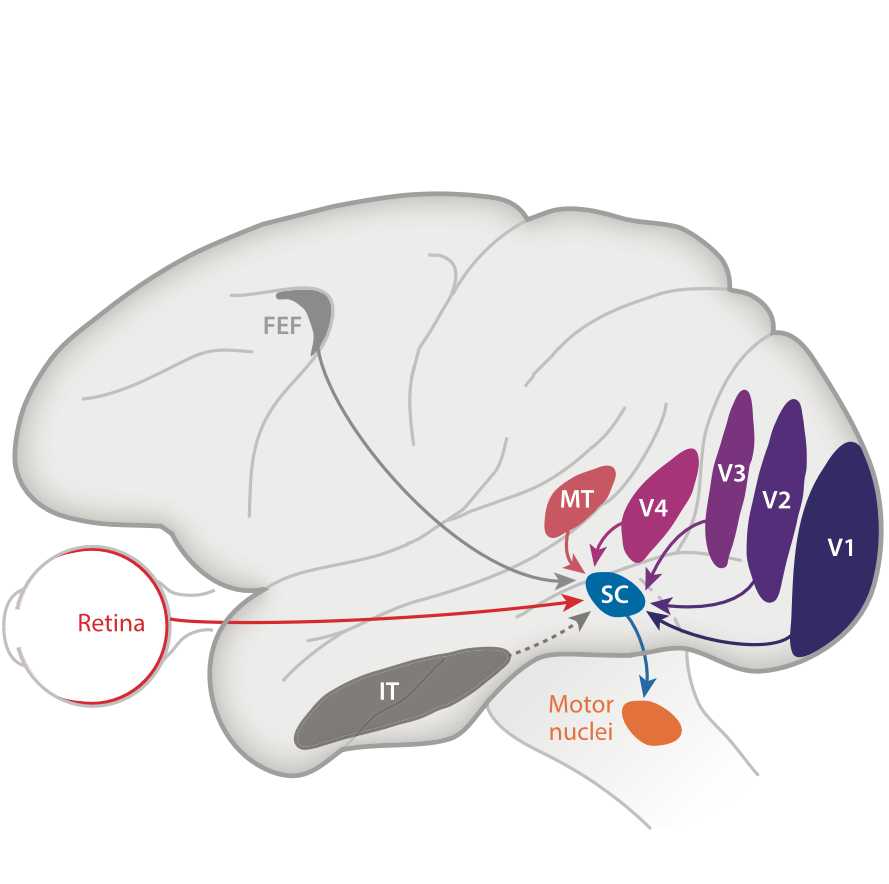

However, we know from lots of our prior work (e.g. here and here), as well as the work of others in the field, that the SC is additionally a highly visual structure (i.e. possessing sensory responses). Indeed, the SC integrates a lot of visual information about the environment from many sources, including higher level visual cortices.

We also know that rapid eye movements introduce disruptive motion blur and other uncertainties in retinal images (e.g. here), much like a shaky video camera on a mobile phone, only at higher speeds. This creates a strong need for image stabilization mechanisms in the visual system across saccades, especially because we generate these rapid eye movements more often than our hearts normally beat.

In our work, we hypothesized that SC neurons are ideally suited to help with the image stabilization processes that are needed across rapid eye movements. That is, at exactly the time that eye movement commands are issued, the very same activity can provide the rest of the brain with an internal source of knowledge about the visual appearance that the eye expects to see in the fovea at the end of the eye movement. The SC movement commands thus act as a sensory bridge across saccades.

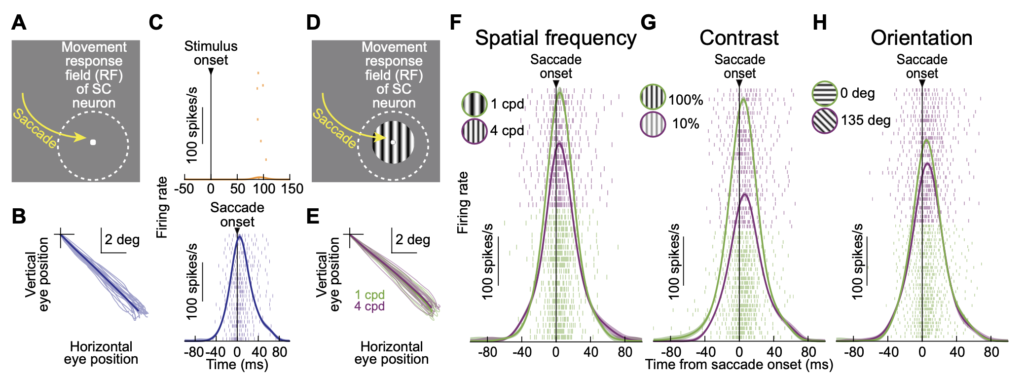

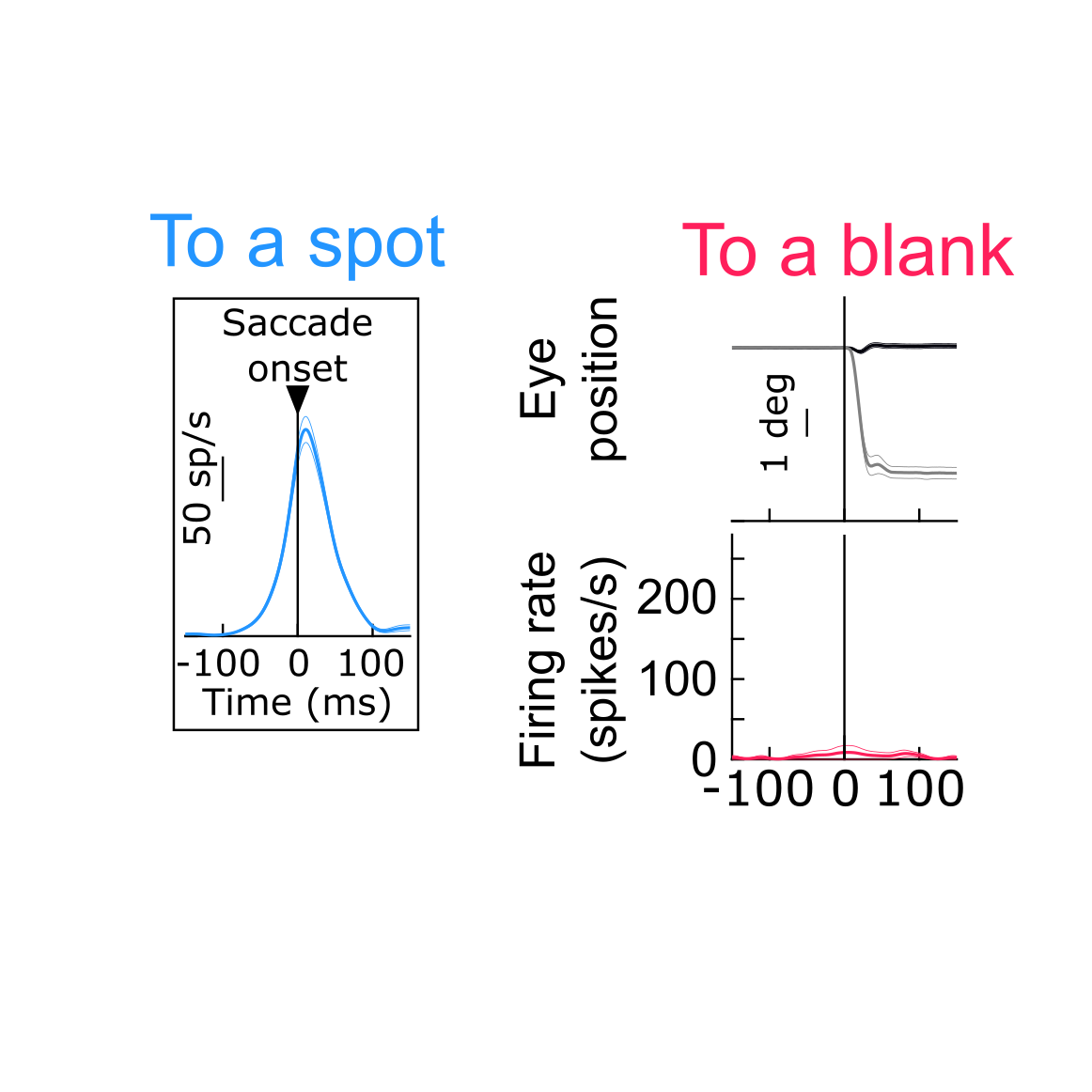

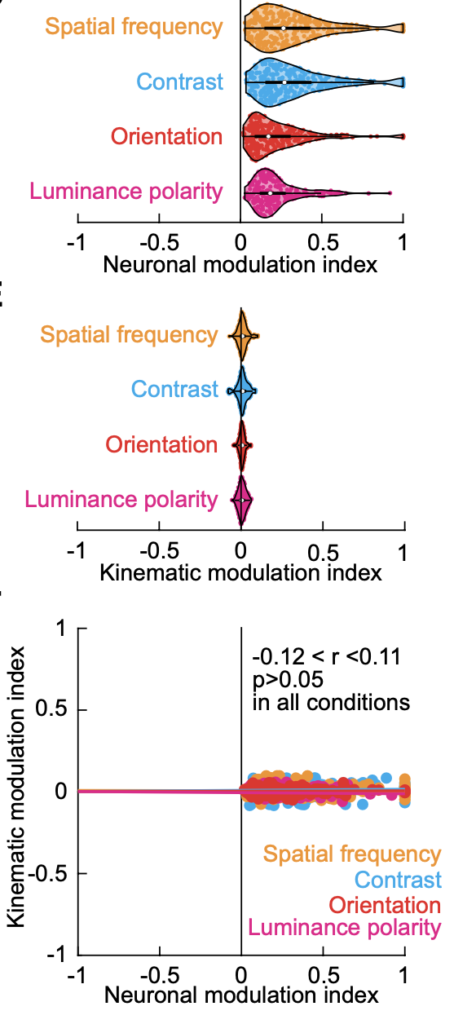

If so, then making the very same saccade to two different images should give rise to two different SC “movement commands”, even though the eye movement properties are the same. In other words, there should be “sensory tuning” in the movement commands. This is exactly what we found. In fact, in some cases, the movement command completely disappeared (e.g. if trying to look towards a blank as opposed to a visual target) even though the saccades were generated rather normally.

Critically, such (sometimes dramatic) changes in the SC motor commands for eye movements were not accompanied by changes in eye movement control properties (like dynamics and kinematics), suggesting that our results could not be trivially explained by classic interpretations of SC movement-related activity bursts.

Our observations in this study suggest that the SC movement commands provide one of the fastest ways by which the brain can implement image stabilization. Specifically, rather than waiting for around 50 ms after the end of each eye movement (which itself can take 50 ms from start to finish) to receive new visual information from the retina, the brain can predict what it will see even before the eye movement has ended. Thus, just as a professional race car driver often describes “feeling the road traction conditions” through the gas pedal, the SC movement commands that drive the gas pedal for rapid eye movements also allow the brain to “feel” the visual environment around it.

This work opens many exciting new avenues of research for the whole field, and we are actively pursuing several follow-up studies that are directly motivated by these intriguing findings. So, stay tuned for more!