We have a new paper published in the Journal of Neuroscience.

In this paper, co-authored by Amarender Bogadhi and Antimo Buonocore and titled “Task-irrelevant visual forms facilitate covert and overt spatial selection”, we showed that images of coherent visual forms, such as faces or fruits, have a profound impact on target selection for eye movements, even when such forms are completely irrelevant to the task at hand.

Whenever we want to “look” at a location in the scene around us, whether overtly by directing our gaze to that location or covertly by trying to pay attention to that location with our “mind’s eye”, the brain must solve the task of selecting that location from many other possible alternatives. There have been many studies of this process, known as “target selection”.

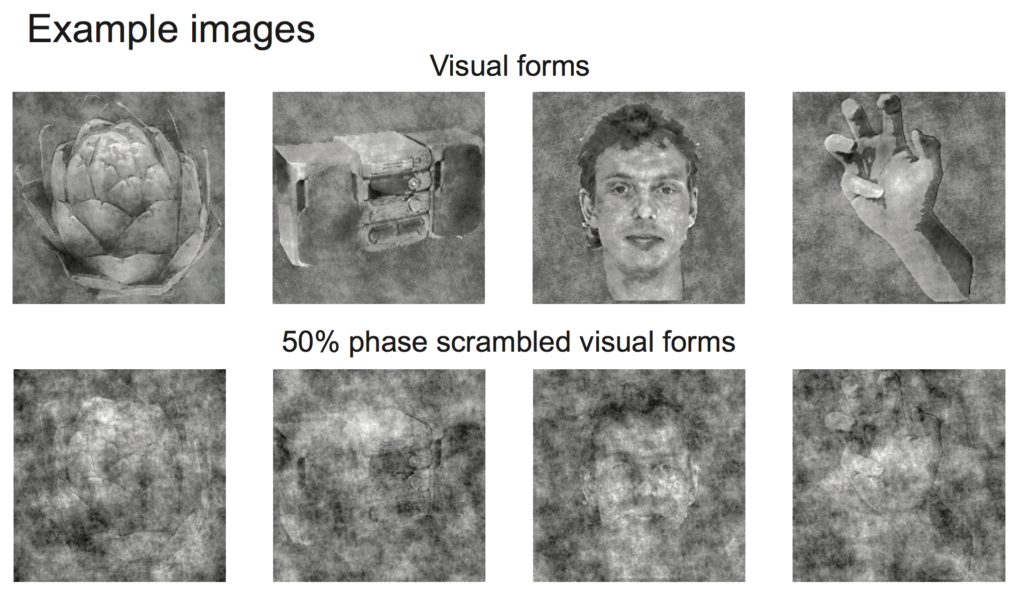

However, it was still not fully clear whether target selection is facilitated when the brain has detected a coherent and recognizable visual object, as opposed to some luminance pattern that is not visually meaningful. In our study, we tested this possibility. We had participants select targets, either overtly with eye movements or covertly with their “mind’s eye”, and we placed images of visual forms either at the selected target locations or opposite them. Critically, the forms were not relevant in any way to the target being selected, and participants were told to ignore them. Yet, the forms had a strong impact on success in the task, as if participants could not help but select these forms. Images that had “pixel energy” similar to that of the visual forms, but with the pixels scrambled to take away the “objectness” of the forms, were not as effective in modifying target selection performance.

These results are important to us because they indicate that brain circuits for target selection should be able to rapidly detect visual objects. This fits with our recent observations that the superior colliculus in the brainstem might have rich visual processing capabilities that can potentially aid it in detecting coherent visual forms (e.g. here).

The paper can be read here.